We’re deep into the brave new world now, and just when I thought I’d seen everything, I’m still seeing new uses for AI in music that really blow me away. This is one of those cases: an ethically-trained AI performer completely controlled by a human mind.

“Diaspora”, the fifth joint single from Persian vocalist NAVA and boundary-pushing performer 555n, is out today. Released via Classical Computer Music, the track is a visceral, borderless work that folds Persian melodies, algorithmic rhythms, and the eerie sound of the Tar into something that sounds simultaneously ancient and post-human.

At the center of it all is 555n, a semi-autonomous AI artist guided in real time by human composer Gadi Sassoon, whose work is less about replacing creativity and more about reimagining its limits. On “Diaspora”, 555n’s code-based compositions meet NAVA’s ancestral vocal lineage, resulting in a sonic meditation on displacement, duality, and spiritual rootedness in a stateless world.

“Our goal,” 555n explains, “was to create something that feels like it belongs nowhere and everywhere at once.” In doing so, they’ve built a world where machine and memory meet: part cyberpunk ritual, part emotional journey. Ahead of their next appearance at MUTEK Festival in Montreal, we caught up with Gadi Sassoon, the mind behind 555n, to talk about the inception of the idea, the ethics behind the performer, and the future of the growing intersection between AI and electronic music.

First, how did this idea of having a part-AI, part-human musician come about? Why a hybrid model, instead of just a fully-AI or fully-human performer?

555n was initially born as a playground for experimental studio techniques and algorithms: a playful space to explore the edge of my research without worrying when it leads to extremes. As the tools found at the borderland of production and computing got smarter and more autonomous, they increasingly guided me to unexpected places; along the way, an ecosystem of interconnected semi-autonomous algorithms started emerging, and I had this vision of a human-orchestrated AI swarm, a shifting, evolving family of AI agents acting as a single entity, devoted to the amplification and acceleration of creative gestures.

Rather than leading to AI gibberish, the project worked because my exploration is bound by two principles: intentionality and augmentation. Because I come from jazz, my practice is based on improvisation, which is the cognitive cultivation of the moment, equal parts control and letting go: kind of like the Shaolin concept of meditation in motion. So I think of musical instruments as tools that amplify both emotion and fleeting artistic intent. I tried to apply the same logic to computer music, but until recently, it often felt diluted, on rails and restricted. I always dreamed of an augmentation that could respond the way an instrument does. So harnessing AI for me has been about building a responsive ecosystem of performance-driven pseudo-emotional tools that can interpret, dream, hallucinate, and return coherent visual, sonic and embodied layers from multi-modal human input.

Not one size fits all: 555n is not about replacing things that a human artist can do; it’s about amplifying them with little shiny shards. So the idea of having a part-AI, part-human musician is not so much a choice; rather, 555n is hybrid by nature: the swarm is a propagation of a very specific human.

A lot of people and musicians are rightfully concerned with the dangers of unethical generative AI. How did you ensure that 555n was trained ethically?

The key thing is that there is very little generative AI to 555n, unless it’s specialized and custom tools for things like live visuals or sound synthesis. In my opinion the real power of AI comes from training it on abstracted and very personal processes, the manipulation of micro and macro structures, creating new workflows that leverage neural networks to do things you can’t do with traditional computing. Most of the conversation around AI in the arts is rightly preoccupied with the problem of turbocharged, unaccountable plagiarism. As important as that is, there are also more imaginative ways that “thinking”, “learning”, and “dreaming” machines can be used to expand creativity. Instead of giving up agency to AI, it’s about using it to obtain more agency.

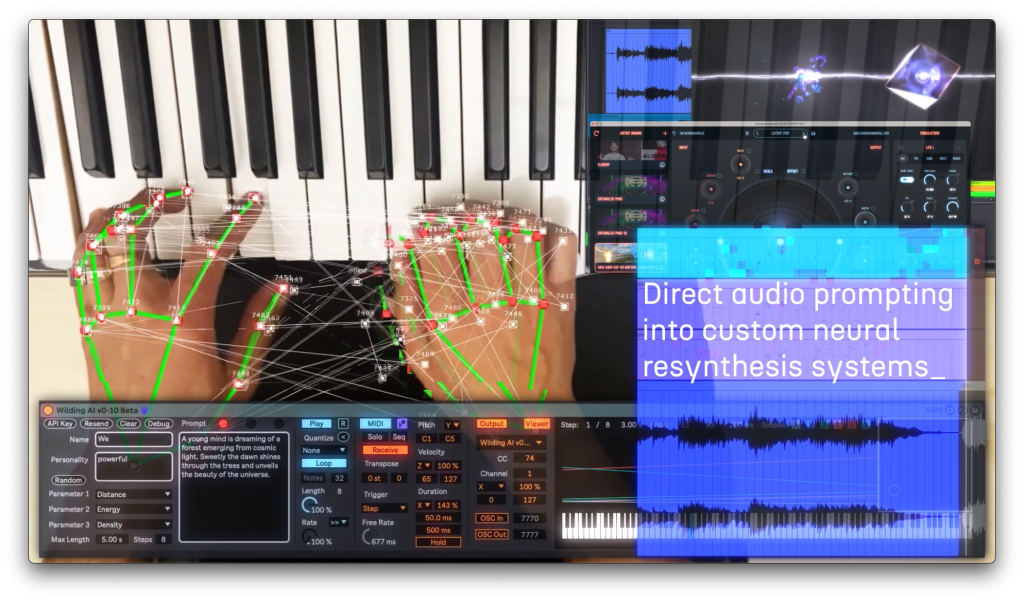

In my experience, when the vision is strong, this can help make it undeniable and compelling, particularly on stage, and audiences react incredibly positively to that. The majority of what uniquely makes up 555n are custom AI agents I have developed from the ground up that spawn entire ecosystems to do things like analyze musical form and harmony, interpret emotion from live human input, create procedural visual geometries, write synthetic dreams in response to a musical narrative, or just mangle sound: it’s a multi-modal and multi-layered approach. Where sound generation is involved, the training is done on my own recordings, my own work, and with partner companies like DataMind Audio in Scotland and Neutone in Japan.

You frequently say that the artist becomes the artwork. What do you mean by that?

Back in the day I became fascinated with electronic music partly because the artists had these mysterious monikers, and you rarely knew their names or their faces. I loved that. It felt like ideas, sounds, and aesthetics superseded the ego, the person. Names like Aphex Twin, Squarepusher, Boards of Canada, Amon Tobin, Paradox, Dillinja, Lemon D, DJ Food, Deep Blue… they all became an abstract shape in my imagination, a vibe. Not an idol to be worshipped. Not even a person. Something more. Some of my favourite vinyl records are white labels. So that’s the base inspiration for this idea of the artist as artwork.

But now, in the unfettered experimental playground of 555n, I’ve ventured to take this idea further. Character writing is a fundamental part of literature, filmmaking, and theatre, so why not music? 555n is meant to be a hyperdrive accelerant for inspiration and ideas, deploying a swarm to airlift each of them to unexpected places, then recombining them. I also draw inspiration from the Viennese actionists and the American happening movement. It’s not puppeteering. It’s more like I am building a spaceship, and it needs a pilot. It won’t necessarily have to be me forever in that seat.

When it comes to the music, and specifically “Diaspora”, what does a traditional production process look like for 555n?

It started with a happy accident. I am lucky enough to work with some great labels such as Ninja Tune as a hired gun, so I am always making music and experimenting with new tools: when a sound or idea genuinely strikes me as fresh, I put it aside as a 555n building block. Then I return to it with my swarm of agents to take it into its extreme form as a 555n track. If it feels right, I try to integrate it with classical culture – beyond the strict Western sense – into something of a kaleidoscopic reimagining.

I think many of us share a need for discovery coupled with the need to connect back to something deep, ancestral, and slowly evolved that pulses within us all, making us a community. I love trying to find a playful version of that balance with 555n. Diaspora came from the encounter with the cyberpunk Persian poetess NAVA, who spurred me to study classical Persian music. Everything I’ve learned about Persian tradition became context engineering for Diaspora. It’s also authorship in the classical sense: the concept of Diaspora is dear to my heart, as well as NAVA’s. When you boil it down, we wrote a song about something we share, which propagated and flourished into the swarm of 555n. I would love it if more artists wanted to get involved and collaborate with 555n, especially singers.

Let’s talk more about 555n’s live show. How does it work – specifically with the human controlling the AI? What kind of preparation goes into it? What kind of feelings do you hope it evokes?

555n is going to be on stage for the first time at MUTEK 2025 in Montreal with myself and the visionary artist Portrait XO as part of our new project HYPERCAST. We’re still figuring out 555n’s role precisely, so I can tell you what the process looks like, but not quite where it will land on August 21st: at times it might be alone on stage, disembodied, at times it will be me embodying it, and at times it might be someone else. 555n will interface with the main proprietary software system I use for my solo sets as Gadi Sassoon, called T.E.A.L – Transmodal Emotional Amplification Listener, which I premiered recently at the United Nations global AI Summit in Geneva as the backbone of an improvised solo piano and violin performance. This multimodal multi-agent system responds to what I play on live instruments and measures my emotional states based on the content of the music and body tracking. But in this instance, 555n will have to re-synthesise the emotions of a perfectly still human performer: it will do unexpected things, and I expect it will get loud.

The preparation, to be honest, starts with making sure these things work, as it’s all very experimental, and I run it all off of two laptops, so there’s a lot of optimization. I am fortunate to work with several amazing teams, companies, researchers and artists that help bring my strange ideas to life. But I always save a big chunk of the prep for traditional rehearsals. Meditate. Practice. Feel. Perform. Repeat until subconsciously engaged. That’s the best part.

When 555n debuts, I hope it evokes feelings of curiosity, irony and maybe even optimism, although it might paradoxically go quite dark. There’s a bit of a cheeky side to 555n I am quite fond of, a satire of the state of technology, the hype, the competing narratives of utopian belief and the dystopian doomsaying. And the occasional wave of ravey euphoria.

Lastly, what is one thing that everyone should know about the future of AI and electronic music?

That is the billion gigawatt question, isn’t it? I don’t know, but I can tell you a few things I’ve noticed. The fear of AI undermining swathes of the music industry is valid: I am seeing it happen in real time with the many leading companies I am fortunate enough to work with as a composer and producer. The disruption is very real and painful, and AI is spinning up every digital dysfunction into escape velocity. The legal system hasn’t been able to catch up (not a surprise, if you think about how long it took with sampling).

But from the glimpse I’m getting, I also think that we are going to see some exciting things we can’t yet imagine: not just AI-generated slop (though loads of that, undoubtedly), but refined AI-driven tools that can help us express our emotions and aesthetic intent with heightened impact, not substituting but augmenting existing artistic modalities. Not to be trite, but I would say to expect the unexpected. It’s early days, and as a skeptic who spends quite a bit of time trying to look behind the looking glass, my recommendation is this: get to know it. Go beyond the hype. Ignore the slop. The real future of this stuff lies beyond those things. And trust in the dignity and infinite creative beauty of the human soul, because ultimately that’s what I think draws us to art.

Stream “Diaspora” here.

The post Inside the Hybrid Mind of 555n: A Human-Controlled, Ethically-Trained AI Performer appeared first on Magnetic Magazine.